As mentioned in prior blogs, NexentaEdge is an Object Cluster that uses multicast for internal communications. Specifically, we use multicast IPv6 UDP datagrams to create, replicate and retrieve Objects.

Creating replicas of object payload on multiple servers is an inherent part of any storage cluster, which makes multicast addressing very inviting. The trick is in doing reliable transfers over unreliable protocols. But there are numerous projects that have already proven this possible. I was familiar with reliable MPI rendezvous transfers being done over unreliable InfiniBand. There was no need to set up a Reliable Connection; once the rendezvous is negotiated the probability of a dropped datagram is nearly zero.

The TCP checksum is useless for validating storage transfers anyway. A comparison of a hash checksum of the received data is needed to protect data – sixteen bits of error detection isn’t enough for petabytes of storage. If the ultimate accept/reject test has nothing to do with the transport protocol then the only question is whether the probability of losing any single datagram is sufficiently low that you don’t care if on extremely rare occasions you have to retransmit the entire chunk as opposed to specific TCP segments. When the probability of a single drop is low enough it is actually better to send fewer acks.
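A back-of-the-envelope version of that trade-off, assuming each datagram is dropped independently with probability $p$ and a chunk spans $n$ datagrams (both symbols are introduced here for illustration and are not part of Replicast):

$$
P_{\text{retransmit}} = 1 - (1 - p)^{n} \approx n\,p \qquad (n\,p \ll 1)
$$

$$
E[\text{chunk transmissions}] = \frac{1}{(1 - p)^{n}} \approx 1 + n\,p
$$

With purely illustrative values of $n = 1000$ and $p = 10^{-6}$, roughly one chunk in a thousand would be sent twice, so the expected overhead of whole-chunk retransmission is about 0.1% of the payload. Real congestion drops are correlated rather than independent, so this is only a rough guide.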

But an ack-minimizing strategy depends on there being very few drops. Modern Ethernet has extraordinarily few transmission errors. Drops are almost exclusively caused by network congestion. With sufficient congestion control “unreliable” transport protocols become effectively reliable. This can be built from low level “no-drop” Ethernet combined with a higher layer application protocol.

The focus of this blog, however, is on formation of multicast groups. As first drafted, our Replicast transport protocol uses pre-existing “Negotiating Groups” of 6 to 20 nodes to select the targets (typically 3 or fewer) of a specific transactional multicast. We called the dynamically created group a “Rendezvous Group”.

However, the existing multicast group control protocols (IGMP and MLD) are not suitable for our storage application. The latency on joining a group can be longer than the time it takes to write the chunk.

The only type of cluster I have been involved in developing is a storage cluster, but I have worked with supporting high performance computing clusters (especially MPI) while working at the pure network level. My sense of those applications is that a source knowing the set of targets that should get a specific message is common. It is very hard to imagine that this need is unique to our specific storage application. There must be many cluster applications that would benefit from multicast transmission of transactional messages.

For example, I can recall a lot of MPI applications that were using RDMA Reads to fetch data, and those were not point-to-point fetches. I suspect that a reliable multicast push would have matched the application needs more closely than RDMA Reads.

The problem is that IGMP/MLD joins simply do not meet the needs for transactional data distribution.

Not only is the latency terrible, but the transaction is wrong. In our application it is the sender who knows the set of targets to be addressed. With IGMP/MLD we have to first multicast to the Negotiating Group what set of targets we need to join the Rendezvous Group. So we’re adding a cluster trip time and worst case kernel latency to the already bad latency in most IGMP/MLD implementations.

What we needed was the ability to quickly set the membership of a Rendezvous Group for the purposes of setting up a single rendezvous delivery. We only care about the sender identified receivers who receive the entire set of datagrams in the transaction.

However, this all depends on being able to quickly configure multicast groups.

The solution we came up with is mind-numbingly simple.

It was apparently too mind-numbingly simple because we kept getting blank stares from numbed minds. The experts who had worked on the existing solutions kept mumbling things about their solution while only vaguely conceding that those solutions do not work for our application.

At the core of the problem is that they were focusing on long-haul L3 multicasting. What we wanted was local L2 multicasting.

To be precise, what would be required for our preferred solution is extremely simple. The enhancements are at the same layer as the IGMP/MLD snooper that sets up the L2 forwarding rules. Handling of L2 frames is not impacted at all. What we need this control plane routine to do in each switch of the desired scope is to execute the following command:

- Set the forwarding portset for Multicast Group X in VLAN Z to be a subset of the forwarding portset for Multicast Group Y in VLAN Z.

- The specific subset must be the union of the forwarding portsets for a set of unicast addresses in VLAN Z: Addr1, Addr2, … The unicast addresses may be specified as L2 MAC addresses or L3 IP addresses, but they must be within the same VLAN.

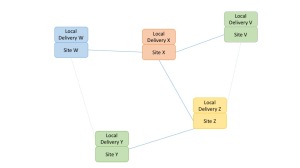

The key is that this algorithm is executed on each switch. Consider a three switch cluster, with an existing Negotiating Group X which has members X1 through X9. We want to configure a Rendezvous Group Y that will multicast to X1, X4 and X8.

With the above example the following forwarding rows will exist on the three switches:

| Rule | SA Portset | SB Portset | SC Portset |
| --- | --- | --- | --- |
| Unicast to X1 | 2 | SA | SA |
| Unicast to X2 | 7 | SA | SA |
| Unicast to X3 | SB | 4 | SB |
| Unicast to X4 | SB | 5 | SB |
| Unicast to X5 | SB | 12 | SB |
| Unicast to X6 | SB | 14 | SB |
| Unicast to X7 | SC | SC | 3 |
| Unicast to X8 | SC | SC | 7 |
| Unicast to X9 | SC | SC | 10 |
| Multicast to X1-X9 | 2, 7, SB, SC | SA, 4, 5, 12, 14, SC | SA, SB, 3, 7, 10 |
| Multicast to X1, X4, X8 | 2, SB, SC | SA, 5, SC | SA, SB, 7 |

The last row (Multicast to X1, X4 and X8) can be formed from data each switch already has, each applying the same instruction to that data.

That’s it. Just execute that instruction on each switch and the subset multicast group will be created. It doesn’t even have to be acknowledged; after all, the UDP datagrams are not being individually acknowledged either. The switch setup and the follow-on rendezvous transfer will be confirmed by the cryptographic hash of the delivered payload. It either matches or it does not.
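The per-switch rule can be sketched in a few lines of Python. This is only an illustrative model, not switch firmware: the `UNICAST` tables, the `subset_portset` function and the string port labels are all hypothetical names, and the parent group's portset is assumed to be the union of the unicast ports for X1 through X9, which is what snooping would have produced for the Negotiating Group.

```python
# Hypothetical model of the three-switch example. Port labels are strings;
# "SA", "SB" and "SC" stand for the inter-switch links.
UNICAST = {
    "SA": {"X1": "2", "X2": "7", "X3": "SB", "X4": "SB", "X5": "SB",
           "X6": "SB", "X7": "SC", "X8": "SC", "X9": "SC"},
    "SB": {"X1": "SA", "X2": "SA", "X3": "4", "X4": "5", "X5": "12",
           "X6": "14", "X7": "SC", "X8": "SC", "X9": "SC"},
    "SC": {"X1": "SA", "X2": "SA", "X3": "SB", "X4": "SB", "X5": "SB",
           "X6": "SB", "X7": "3", "X8": "7", "X9": "10"},
}


def subset_portset(unicast, parent_portset, members):
    """Union of the unicast ports for `members`, limited to the parent's ports."""
    wanted = {unicast[m] for m in members}
    return wanted & parent_portset


# Every switch applies the same instruction to its own local tables.
RENDEZVOUS = {}
for switch, table in UNICAST.items():
    parent = set(table.values())  # assumed portset of the Negotiating Group
    RENDEZVOUS[switch] = subset_portset(table, parent, ["X1", "X4", "X8"])

for switch, ports in RENDEZVOUS.items():
    print(switch, sorted(ports))
```

Note that no switch needs to know anything about the other switches' tables. Each one resolves the member list against its own unicast entries, and the inter-switch links fall out of that lookup naturally.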

I have submitted an IETF draft: https://tools.ietf.org/html/draft-bestler-transactional-multicast-00, but there hasn’t been much reaction.

This is a very minimal enhancement to IGMP/MLD snooping.

- There is no change to the underlying L2 forwarding tables required. The same forwarding entries are being created, just with simpler transactions. This is an important constraint. If we could modify the firmware that actually forwards each packet we could just specify a “Threecast” IP header that listed 3 destination IP addresses. But there is no way that will ever happen; we need to deal with switch chips as they are.

- There is no additional state that the switches must maintain. In fact, there is less tracking required for a transactional subset multicast group than for a conventional multicast group.

The lack of response presents a challenge. The people who understood multicast did not understand storage, and most of the people who understood storage did not understand multicast.

We could write switch-model-specific code to shove the correct L2 forwarding rules to any given switch model, but that was not much of a long term solution. The IGMP/MLD snooping extension required for this technique can be implemented in OpenFlow, but the number of switches that allow OpenFlow to modify any flow is extremely limited, effectively reducing it to being a “switch specific” solution.

OpenFlow does have the benefit of being constrained. Other local interfaces are not only model specific, they are unconstrained. In order to be able to do anything you effectively have to be given permission to do everything. In order for the network administrator to enable us to set those tables they would have to trust our application with the keys to their kingdom.

As a general rule I hate asking the customer network administrator to do something that I would fire my network administrator for agreeing to.

The Push model solves that because it only sets up multicast groups that are subsets of conventionally configured (and validated) groups, and only for the duration of a short transaction.

We came up with an alternate solution that involves enumerating the possible Rendezvous Groups sufficiently before they are needed that the latency of IGMP/MLD joins is not an issue. The transaction processing then claims that group in real-time rather than configuring it. I’ll describe that more in the next blog. For the balance of this blog I’d like to re-iterate exactly why push multicasting makes sense, and is very simple to implement.

It is also totally consistent with existing IGMP/MLD. It is really just a specialized extension of IGMP/MLD snooping.

To understand why this is benign you need to consider the following issues:

- How is the group identified?

- What are the endpoints that receive the messages?

- What is the duration of the group?

- Who are the potential members of the group?

- How much latency does the application tolerate?

- Are there any Security Considerations?

Question 1: How is the Group Identified?

In IGMP/MLD listeners identify themselves. It is pull-based control of membership. The sender does not control or even know the recipients. This matches the multicast streaming use-case very well.

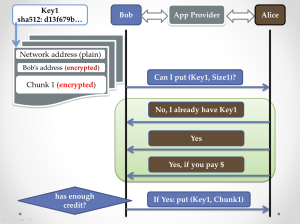

However, it does not match a cluster that needs to distribute a transactional message to a subset of a known cluster. One example of this need is replication within an object cluster, where a set of targets has been selected through a higher layer protocol.

IGMP-style setup here adds excessive latency to the process. The targets must be informed of their selection, they must execute IGMP joins and confirm their joining to the source before the multicast delivery can begin. Only replication of large storage assets can tolerate this setup penalty. A distributed computation may similarly have data that is relevant to a specific set of recipients within the cluster. Having to replicate the transfer over multiple unicast connections is undesirable, as is having to incur the latency of IGMP setup.

Two solutions will be proposed where a sender can form or select a multicast group to match a set of targets, without requiring any extra interaction with those targets, as long as the targets are already members of a pre-existing multicast group. Allowing a sender to multicast to any set of IP addresses would clearly be unacceptable for both security and network administration reasons.

Question 2: What are the endpoints that receive the messages?

For the specific storage protocol we were working on the desired endpoints are virtual drives, not IP endpoints.

A given storage server can determine which of its virtual drives is being addressed solely from the destination multicast IP address. One of the questions we are seeking feedback on is whether this is unique to this storage protocol, or whether the ability to identify a multicast group as a set of higher layer objects is generally desirable.

Question 3: What is the duration of the group?

IGMP/MLD is designed primarily for the multicast streaming use-case. A group has an indefinite lifespan, and members come and go at any time during this lifespan, which might be measured in minutes, hours or days.

Transactional multicasting seeks to identify a multicast group for the duration of sending a set of multicast datagrams related to a specific transaction. Recipients either receive the entire set of datagrams or they do not. Multicast streaming typically transmits error tolerant content, such as MPEG encoded material. Transactional multicasting will typically transmit data with some form of validating signature that allows each recipient to confirm full reception of the transaction.
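A receiver-side accept/reject test along those lines might look like the following sketch. The choice of SHA-256, the `(sequence, payload)` datagram layout and every function name here are assumptions made for illustration; the actual Replicast wire format and hash algorithm are not specified in this blog.

```python
import hashlib


def chunk_digest(payload: bytes) -> str:
    """Hex digest used as the validating signature (SHA-256 is an assumption)."""
    return hashlib.sha256(payload).hexdigest()


def reassemble(datagrams, total):
    """Return the payload if every sequence number 0..total-1 arrived, else None."""
    by_seq = {seq: data for seq, data in datagrams}
    if set(by_seq) != set(range(total)):
        return None
    return b"".join(by_seq[i] for i in range(total))


def accept_transaction(datagrams, total, expected_digest):
    """All-or-nothing test: the whole chunk matches the digest or is rejected."""
    payload = reassemble(datagrams, total)
    return payload is not None and chunk_digest(payload) == expected_digest


# Illustrative usage: a 4 KiB chunk split into four 1 KiB datagrams.
chunk = bytes(range(256)) * 16
sent = [(i, chunk[i * 1024:(i + 1) * 1024]) for i in range(4)]
expected = chunk_digest(chunk)

complete_ok = accept_transaction(sent, 4, expected)
dropped_ok = accept_transaction(sent[:3], 4, expected)
corrupted = sent[:3] + [(3, b"\x00" * 1024)]
corrupted_ok = accept_transaction(corrupted, 4, expected)
print(complete_ok, dropped_ok, corrupted_ok)
```

A dropped datagram and a corrupted datagram are handled identically: the transaction is rejected as a whole and the higher-layer protocol retries it.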

This obviously needs to be combined with applicable congestion control strategies being deployed by the upper layer protocols. The Nexenta Replicast protocol only does bulk transfers against reserved bandwidth, but there are probably as many solutions for this problem as there are applications. The important distinction here is that there is no need to dynamically adjust multicast forwarding tables during the lifespan of a transaction, while IGMP and MLD are designed to allow the addition and deletion of members while a multicast group is in use. The limited duration of a transactional multicast group implies that there is no need for the multicast forwarding element to rebuild its forwarding tables after it restarts. Any transaction in progress will have failed, and been retried by the higher-layer protocol. Merely limiting the rate at which it fails and restarts is all that is required of each forwarding element.

Question 4: Who are the members of the group?

IGMP/MLD is designed to allow any number of recipients to join or leave a group at will. Transactional multicast requires that the group be identified as a small subset of a pre-existing multicast group. Building forwarding rules that are a subset of forwarding rules for an existing multicast group can be done substantially faster than creating forwarding rules to arbitrary and potentially previously unknown destinations.

Question 5: How much latency does the application tolerate?

While no application likes latency, multicast streaming is very tolerant of setup latency. If the end application is viewing or listening to media, the number of msecs required to subscribe to the group will not have a noticeable impact on the end user.

For transactions in a cluster, however, every msec is delaying forward progress. The time it takes to do an IGMP join is simply an intolerable addition to the latency of storing an object. This is especially so in an object cluster using SSD or other fast storage technology. The IGMP/MLD Join might take longer than the actual writing of storage.

Question 6: Are there any Security Considerations?

Multicast Groups configured by this method are constrained to be a subset of a conventionally configured multicast group. No datagram can reach any destination that it cannot already reach by sending the datagram to the referenced group.

Obviously the application layer has to know the meaning of packets sent on each multicast group, but that is already true of existing multicast UDP.

Proposed Method:

Set the multicast forwarding rules for pre-existing multicast forwarding address X to be the subset of the forwarding rules for existing group Y required to reach a specified member list.

This is done by communicating the same instruction (above) to each multicast forwarding network element.

This can be done by unicast addressing with each of them, or by multicasting the instructions. Each multicast forwarder will set its multicast forwarding port set to be the union of the unicast forwarding it has for the listed members, but the result must be a subset of the forwarding ports for the parent group.

For example, consider an instruction to create a transactional multicast group I, which is a subset of multicast group J, to reach addresses A, B and C. Addresses A and B are attached to multicast forwarder X, while C is attached to multicast forwarder Y.

On forwarder X the forwarding rule for the new group I contains:

- The forwarding port for A.

- The forwarding port for B.

- The forwarding port to forwarder Y (a hub link). This eventually leads to C.

While on forwarder Y the forwarding rule for the new group I will contain:

- The forwarding port for forwarder X (a hub link). This eventually leads to A and B.

- The forwarding port for C.
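The two-forwarder example can be modeled as a small sketch in which the same instruction object is handed to every forwarder. The `SubsetInstruction` and `Forwarder` classes, the port names and the extra address `Z` are all hypothetical. The sketch also assumes that a port outside the parent group is silently dropped from the result; rejecting the whole instruction would be an equally plausible policy, and the text above does not say which is intended.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SubsetInstruction:
    new_group: str      # transactional group being configured (I)
    parent_group: str   # conventionally configured group (J)
    members: tuple      # unicast addresses the sender wants to reach


@dataclass
class Forwarder:
    name: str
    unicast: dict                                   # address -> forwarding port
    multicast: dict = field(default_factory=dict)   # group -> set of ports

    def apply(self, instr):
        parent = self.multicast.get(instr.parent_group, set())
        wanted = {self.unicast[m] for m in instr.members if m in self.unicast}
        # Assumed policy: clamp to the parent group's ports so the new
        # group can never reach anything the parent group cannot.
        self.multicast[instr.new_group] = wanted & parent
        return self.multicast[instr.new_group]


# Z is attached to forwarder X but is not a member of parent group J.
fx = Forwarder("X", {"A": "pA", "B": "pB", "C": "to-Y", "Z": "pZ"},
               {"J": {"pA", "pB", "to-Y"}})
fy = Forwarder("Y", {"A": "to-X", "B": "to-X", "C": "pC", "Z": "to-X"},
               {"J": {"to-X", "pC"}})

instr = SubsetInstruction("I", "J", ("A", "B", "C"))
ports_x = fx.apply(instr)
ports_y = fy.apply(instr)

# A request that names the outsider Z cannot widen the group's reach.
clamped_x = fx.apply(SubsetInstruction("I2", "J", ("A", "Z")))
print(sorted(ports_x), sorted(ports_y), sorted(clamped_x))
```

Because the result is always intersected with the parent group's ports, the sender can narrow delivery within group J but can never add a destination that J does not already reach.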

Many Ethernet switches already support command line and/or SNMP methods of setting these multicast forwarding rules, but it is challenging for an application to reliably apply the same changes using multiple vendor-specific methods. Having a standardized method of pushing the membership of a multicast group from the sender would be desirable.

The Alternate Method:

But having a product depend upon a feature you are trying to get adopted doesn’t work so well. We needed a method of dynamically selecting Rendezvous Groups that was compatible with terrible IGMP/MLD Join latencies. I’ll explain that in the next blog.